A simple game exploring the human drawings that the algorithm rejects.

Released in November 2016, Quick, Draw! is a simple online game developed as part of Google's A.I. Experiments initiative. In the game, players are given 20 seconds to draw objects based on prompts. Google's artificial intelligence software then tries to identify the drawing. In just six months, the Quick, Draw! dataset accumulated 50 million drawings from over 15 million players across the world. Google has since open-sourced the dataset, encouraging researchers and artists to explore neural networks and cross-cultural drawing behaviors. The gamification of Quick, Draw! epitomizes the spirit of playbor (play + labor); the model encourages Internet users to draw as a form of play, yet these acts of drawing serve as free labor to train Google's neural network algorithm.

As I explored the dataset, I was intrigued by the drawings that the algorithm rejected. Why were these particular drawings rejected? If the algorithm was created and trained by the masses, does the rejection of a drawing translate to a rejection by the masses?

The algorithm accepts or rejects a drawing based on a fixed, predetermined threshold. While some of the rejected drawings were clearly off-topic, there were also players who interpreted or drew the prompt in creative, alternative ways. For example, amongst the rejected drawings of "Aircraft Carrier," one player drew a kid carrying an airplane.

Along with the differing cultural interpretations of prompts, the algorithm also rejected drawings that visualized the prompts with different forms of spatiality, temporality, and narration. Based on the feedback from the masses, the algorithm tends to accept objects suspended in white space and to reject drawings that attempt to frame an entire scene. Other limitations of the game include the time constraint and the lack of an erase or undo button.

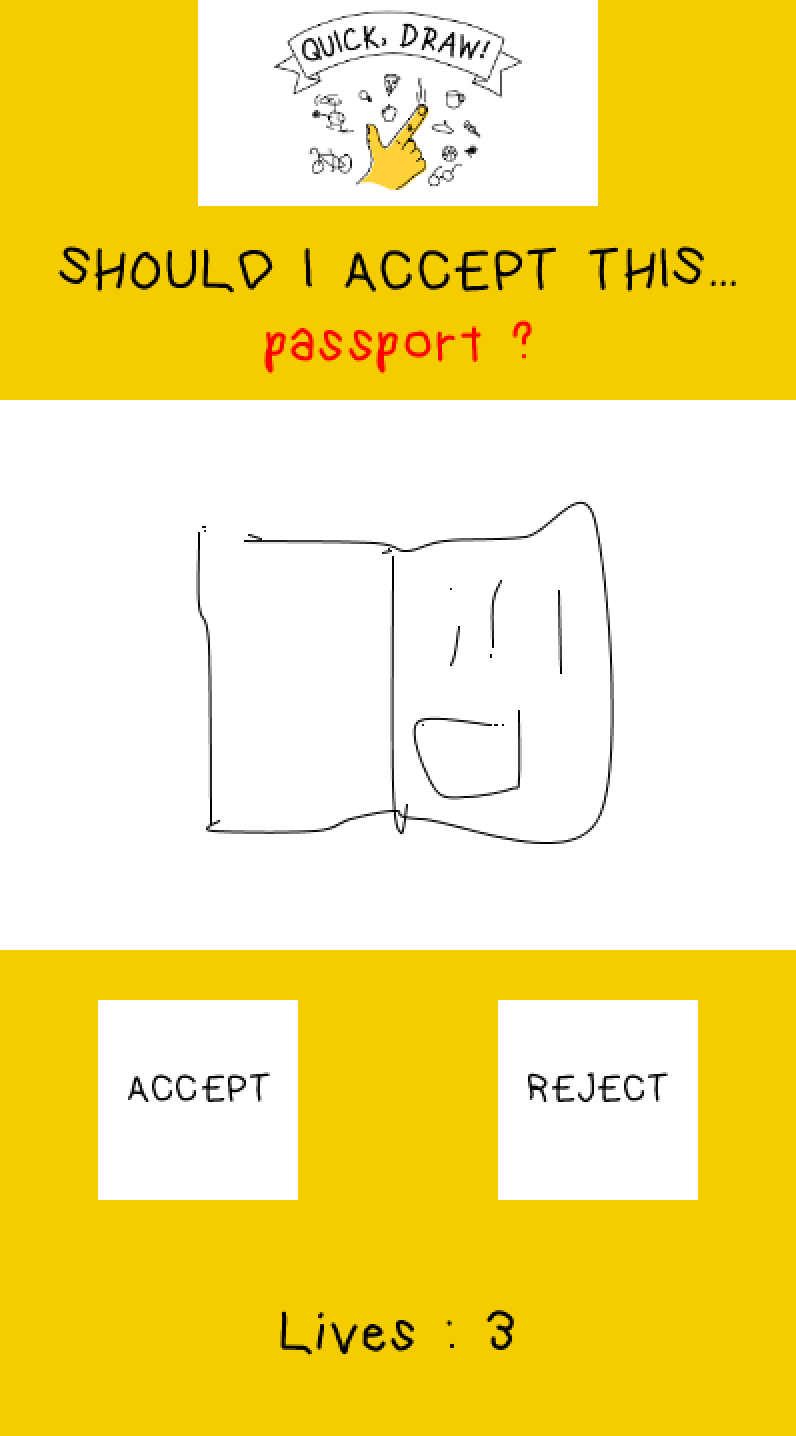

For this project, I want to pay homage to the rejected. In this simple game coded in Processing, players train to become "better" algorithms. They are asked to judge whether a drawing should be accepted or rejected, but the criterion for correctness is the algorithm's decision. Thus, the question of "Is this a realistic _____?" shifts to "Does the algorithm think this is a realistic _____?" The shift in rightness and wrongness highlights the cyclical irony in the learning processes of humans and algorithms.
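
The scoring rule described above can be sketched in a few lines. This is an illustration only, not the project's actual Processing code. It is written in plain Java, the language Processing builds on. The class and method names (`JudgeGame`, `Drawing`, `isCorrect`, `score`) are hypothetical. The `recognized` flag is assumed to correspond to the field of the same name that the public Quick, Draw! dataset stores with each drawing.

```java
import java.util.Arrays;
import java.util.List;

// Hypothetical sketch of the judging rule: the player is "right" only
// when their verdict matches the algorithm's verdict.
class JudgeGame {

    // One drawing shown to the player. `recognized` is assumed to hold
    // the algorithm's decision (true = accepted, false = rejected).
    static final class Drawing {
        final String word;
        final boolean recognized;

        Drawing(String word, boolean recognized) {
            this.word = word;
            this.recognized = recognized;
        }
    }

    // The player's own opinion of the drawing is irrelevant here;
    // only agreement with the algorithm counts as correct.
    static boolean isCorrect(Drawing drawing, boolean playerAccepts) {
        return playerAccepts == drawing.recognized;
    }

    // Counts how many of the player's verdicts agree with the algorithm.
    static int score(List<Drawing> drawings, List<Boolean> verdicts) {
        if (drawings.size() != verdicts.size()) {
            throw new IllegalArgumentException("one verdict per drawing is required");
        }
        int agreed = 0;
        for (int i = 0; i < drawings.size(); i++) {
            if (isCorrect(drawings.get(i), verdicts.get(i))) {
                agreed++;
            }
        }
        return agreed;
    }

    public static void main(String[] args) {
        List<Drawing> round = Arrays.asList(
            new Drawing("aircraft carrier", false), // e.g. a kid carrying an airplane
            new Drawing("cat", true),
            new Drawing("aircraft carrier", true));
        List<Boolean> verdicts = Arrays.asList(true, true, false);
        System.out.println("Agreed with the algorithm on "
            + score(round, verdicts) + " of " + round.size() + " drawings");
    }
}
```

Note that a player who accepts the kid carrying an airplane is marked wrong, however reasonable the drawing looks to a human eye. That inversion is the point of the game.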

Since algorithms are trained by human labor, what happens when a human tries to become an ideal algorithm?